The field of artificial intelligence has long been dominated by the paradigm of scaling: bigger models, more data, longer training runs. A recent paper takes a different approach that challenges common assumptions about AI reasoning. The Hierarchical Reasoning Model (HRM) reports strong performance on complex reasoning tasks using just 27 million parameters and 1000 training examples, outperforming much larger models that rely on chain-of-thought prompting.

Table of contents

- The Problem with Current AI Reasoning

- Enter the Brain-Inspired Solution

- How HRM Works

- Revolutionary Training Approach

- Stunning Results That Redefine Expectations

- The Neuroscience Connection

- Visualizing the Reasoning Process

- What This Means for AI’s Future

- Implementation Details

- Getting Started with HRM

- The Paradigm Shift

- Challenges and Future Directions

- Conclusion

The Problem with Current AI Reasoning

Today’s large language models (LLMs) face a fundamental limitation: they are paradoxically shallow despite their massive scale. A standard Transformer has a fixed computational depth, which places it in restricted complexity classes such as AC⁰ or TC⁰ and prevents it from solving, in a single forward pass, problems that require polynomial-time algorithms. This is why even the most advanced models struggle with tasks that require extensive logical reasoning, backtracking, or multi-step planning.

The current solution—Chain-of-Thought (CoT) prompting—is essentially a workaround. It externalizes reasoning into sequential text generation, breaking down complex problems into step-by-step linguistic explanations. But CoT has serious limitations:

- Brittleness: A single misstep can derail the entire reasoning process

- Data hunger: Requires enormous amounts of training data

- Inefficiency: Generates many tokens, leading to slow response times

- Dependency on decomposition: Relies on human-defined problem breakdowns

Enter the Brain-Inspired Solution

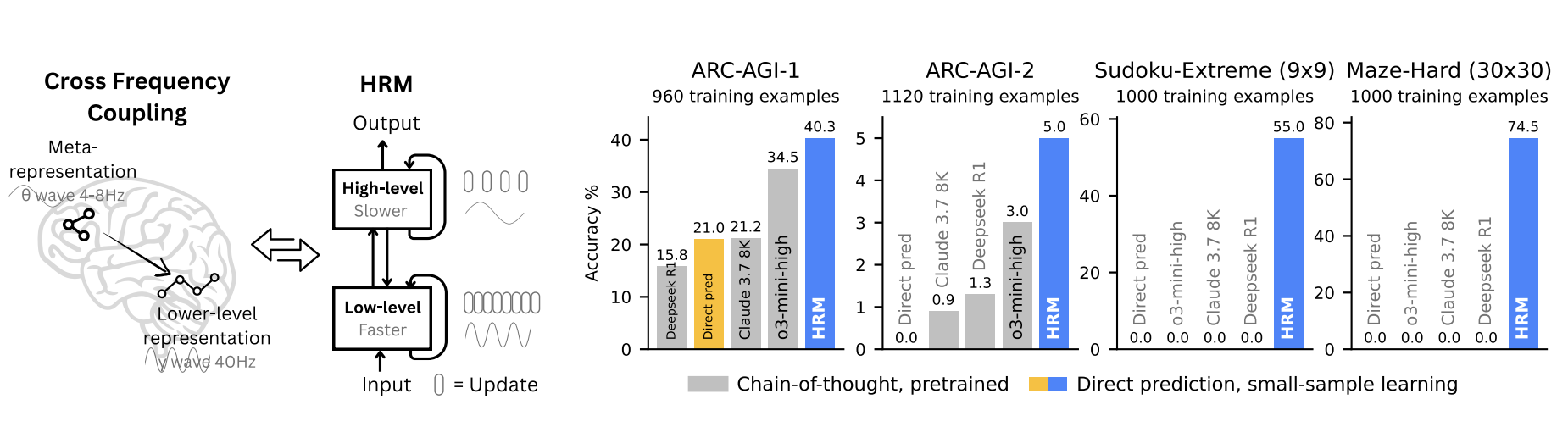

The HRM takes inspiration from three fundamental principles of neural computation observed in the human brain:

Hierarchical Processing: The brain processes information across a hierarchy of cortical areas. Higher-level areas integrate information over longer timescales and form abstract representations, while lower-level areas handle immediate, detailed processing.

Temporal Separation: Different hierarchical levels operate at distinct intrinsic timescales—think slow theta waves (4-8 Hz) guiding fast gamma waves (30-100 Hz). This separation allows stable, high-level guidance of rapid, low-level computations.

Recurrent Connectivity: Extensive feedback loops enable iterative refinement, yielding more accurate representations through additional processing cycles.

How HRM Works

The HRM consists of four key components working in harmony:

- Input Network: Projects raw input into a working representation

- Low-Level Module (L): Handles fast, detailed computations

- High-Level Module (H): Manages slow, abstract planning

- Output Network: Extracts final predictions

The magic happens in the interaction between the L and H modules. The system operates in cycles:

- The L-module performs multiple rapid updates while the H-module remains fixed

- After T timesteps, the L-module reaches local equilibrium

- The H-module incorporates the L-module’s result and updates once

- The L-module resets and begins a new computational phase

This creates what the researchers call “hierarchical convergence”—avoiding the premature convergence that plagues standard recurrent networks while maintaining computational depth.
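
The update schedule described in the steps above can be sketched in a few lines. This is only an illustration of the timing, not of the modules themselves: the function name `update_schedule` is hypothetical, and `N` (number of high-level cycles) and `T` (low-level steps per cycle) follow the parameter names used in the pseudocode later in this post.

```python
def update_schedule(N=2, T=2):
    """List which module updates at each low-level timestep.

    N is the number of high-level cycles, T the number of low-level
    steps per cycle. Returns (timestep, module) pairs in order.
    """
    events = []
    for step in range(1, N * T + 1):
        events.append((step, "L"))       # L updates on every timestep
        if step % T == 0:
            events.append((step, "H"))   # H updates once per cycle of T steps
    return events


for step, module in update_schedule(N=2, T=3):
    print(f"t={step}: update {module}")
```

With `N` cycles of `T` steps, the low-level module updates `N * T` times while the high-level module updates only `N` times, which is the separation of timescales the architecture relies on.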

Revolutionary Training Approach

Traditional recurrent networks require Backpropagation Through Time (BPTT), which demands O(T) memory for T timesteps. HRM introduces a brilliant one-step gradient approximation that:

- Uses only O(1) memory (constant, regardless of the number of recurrent timesteps T)

- Eliminates the need for BPTT

- Remains biologically plausible

- Can be easily implemented in modern frameworks like PyTorch
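
One way to see why a single step can be enough is a standard fixed-point argument, sketched here under the assumption that the recurrence settles at a state $z^*$ satisfying $z^* = F(z^*; \theta)$. The symbols $F$, $z^*$, and $J_F$ are notation introduced for this illustration. Differentiating the fixed-point condition gives the exact gradient, and expanding the inverse as a Neumann series (valid when the spectral radius of $J_F$ is below 1) shows what the one-step version keeps:

$$
\frac{\partial z^*}{\partial \theta} = \left(I - J_F\right)^{-1} \frac{\partial F}{\partial \theta},
\qquad
J_F = \left.\frac{\partial F}{\partial z}\right|_{z^*}
$$

$$
\left(I - J_F\right)^{-1} = I + J_F + J_F^2 + \cdots \;\approx\; I
\quad\Longrightarrow\quad
\frac{\partial z^*}{\partial \theta} \approx \frac{\partial F}{\partial \theta}
$$

Keeping only the first term means the gradient flows through the final update alone, so earlier states do not need to be stored. The listing that follows does this by running every step except the last without gradient tracking.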

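```python
import torch

# input_embedding, L_net, H_net and output_head are assumed to be
# modules defined elsewhere.
def hrm(z, x, N=2, T=2):
    x = input_embedding(x)
    zH, zL = z  # high-level and low-level hidden states

    # Run all but the final step without building an autograd graph.
    with torch.no_grad():
        for _i in range(N * T - 1):
            zL = L_net(zL, zH, x)       # L updates on every timestep
            if (_i + 1) % T == 0:
                zH = H_net(zH, zL)      # H updates once every T steps

    # 1-step grad: only these final updates are tracked for backprop
    zL = L_net(zL, zH, x)
    zH = H_net(zH, zL)

    return (zH, zL), output_head(zH)
```

The training also incorporates deep supervision, which provides feedback at multiple intermediate steps, and Adaptive Computation Time (ACT), which lets the model allocate computation dynamically based on problem complexity.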
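
To make the idea of adaptive computation concrete, here is a minimal sketch of a halting rule. It assumes the model emits two scores after each supervised segment, one for halting and one for continuing, and stops when halting scores higher or a hard cap is reached. The names `should_halt`, `segments_used`, `q_halt`, `q_continue`, `m_max`, and `m_min` are hypothetical and chosen for this illustration; the exact rule used in HRM may differ.

```python
def should_halt(q_halt, q_continue, segment, m_max, m_min=1):
    """Decide whether to stop after the given segment (1-indexed)."""
    if segment >= m_max:
        return True  # hard cap on the number of segments
    # Otherwise stop only once the minimum is met and halting scores higher.
    return segment >= m_min and q_halt > q_continue


def segments_used(q_values, m_max=8, m_min=1):
    """Return how many segments run before the rule says stop.

    q_values is a sequence of (q_halt, q_continue) pairs, one per segment.
    """
    used = 0
    for used, (q_halt, q_continue) in enumerate(q_values, start=1):
        if should_halt(q_halt, q_continue, used, m_max, m_min):
            break
    return used


scores = [(0.1, 0.9), (0.2, 0.8), (0.7, 0.3), (0.9, 0.1)]
print(segments_used(scores))            # stops at the third segment
print(segments_used(scores, m_max=2))   # the cap forces an earlier stop
```

Under this rule, easy inputs stop after a few segments while harder ones keep computing up to the cap, which is how the compute budget follows problem difficulty.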

Stunning Results That Redefine Expectations

The results speak for themselves. With just 1000 training examples and no pre-training, HRM achieves:

ARC-AGI Challenge (Abstraction and Reasoning Corpus benchmark)

- HRM: 40.3% accuracy

- OpenAI o3-mini-high: 34.5%

- Claude 3.7 8K: 21.2%

- DeepSeek R1: 21.0%

Sudoku-Extreme (9×9 Complex Puzzles)

- HRM: 55.0% accuracy

- All CoT models: 0% (complete failure)

Maze-Hard (30×30 Optimal Pathfinding)

- HRM: 74.5% accuracy

- All CoT models: 0% (complete failure)

These aren’t marginal improvements—they’re paradigm-shifting breakthroughs that demonstrate the power of architectural innovation over brute-force scaling.

The Neuroscience Connection

Perhaps most remarkably, HRM develops organizational principles that resemble those seen in biological brains. The researchers report that trained HRM models exhibit a dimensionality hierarchy similar to the one measured in mouse cortex:

- The high-level module operates in high-dimensional space (Participation Ratio: 89.95)

- The low-level module uses more constrained representations (Participation Ratio: 30.22)

- This hierarchy emerges from training, not architectural design

This suggests HRM has discovered fundamental organizational principles that biological systems use for flexible reasoning.
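
The Participation Ratio quoted above is a standard measure of effective dimensionality: given the eigenvalues of the covariance matrix of a set of activations, it is the square of their sum divided by the sum of their squares. The sketch below computes it from a list of eigenvalues; the function name `participation_ratio` is chosen for this illustration, and obtaining the eigenvalues from real activations is left out.

```python
def participation_ratio(eigenvalues):
    """Effective dimensionality: (sum of eigenvalues)^2 / (sum of squares)."""
    total = sum(eigenvalues)
    sum_sq = sum(v * v for v in eigenvalues)
    if sum_sq == 0:
        raise ValueError("at least one eigenvalue must be non-zero")
    return total * total / sum_sq


# Variance spread evenly over 4 directions gives a ratio of 4.
print(participation_ratio([1.0, 1.0, 1.0, 1.0]))
# Variance concentrated in a single direction gives a ratio of 1.
print(participation_ratio([5.0, 0.0, 0.0, 0.0]))
```

A higher value means the variance is spread over more directions, so a ratio near 90 for the high-level module against roughly 30 for the low-level module indicates that the high-level states occupy a much richer subspace.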

Visualizing the Reasoning Process

One of the most fascinating aspects of HRM is its interpretability. Researchers can visualize the model’s intermediate reasoning steps:

Maze solving: HRM explores multiple paths simultaneously, eliminates blocked routes, and iteratively refines solutions—similar to breadth-first search with pruning.

Sudoku strategy: The model employs depth-first search with backtracking, showing human-like problem-solving strategies where it explores potential solutions and backtracks when hitting dead ends.

ARC tasks: Incremental improvements through hill-climbing optimization, making progressive adjustments to reach the final solution.

This transparency offers unprecedented insight into how neural networks can implement complex reasoning algorithms.

What This Means for AI’s Future

The implications of HRM extend far beyond impressive benchmark scores:

Efficiency Revolution

HRM proves that architectural innovation can dramatically reduce computational and data requirements. This opens possibilities for deploying sophisticated reasoning systems on resource-constrained devices.

True Algorithmic Learning

Unlike CoT models that rely on linguistic decomposition, HRM learns to execute complex algorithms directly in latent space, approaching practical Turing-completeness.

Biological Plausibility

The brain-inspired design and biologically plausible training mechanisms offer insights into how natural intelligence might work and how we can build more robust AI systems.

Beyond Language-Centric AI

HRM demonstrates that reasoning doesn’t need to be mediated through language tokens, potentially unlocking more efficient and powerful forms of machine cognition.

Implementation Details

For those interested in the technical implementation:

- Architecture: Encoder-only Transformer blocks for both L and H modules

- Enhancements: Rotary Positional Encoding, Gated Linear Units, RMSNorm

- Optimization: Adam-atan2 optimizer with constant learning rate and linear warm-up

- Memory: O(1) memory footprint for backpropagation, regardless of the number of recurrent timesteps

- Training: Deep supervision with detached gradients between segments
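
As a small illustration of one of the components listed above, RMSNorm rescales a vector by the reciprocal of its root mean square, optionally followed by a per-element gain. The sketch below is a plain-Python version for a single vector; the function name `rms_norm`, the `eps` default, and the optional `gain` argument are assumptions for this example, not details taken from the HRM code.

```python
import math


def rms_norm(x, gain=None, eps=1e-6):
    """Normalize a vector by its root mean square, then apply a gain."""
    mean_sq = sum(v * v for v in x) / len(x)
    scale = 1.0 / math.sqrt(mean_sq + eps)   # eps guards against division by zero
    if gain is None:
        gain = [1.0] * len(x)                # identity gain by default
    return [v * scale * g for v, g in zip(x, gain)]


print(rms_norm([3.0, 4.0]))
```

Unlike LayerNorm, this does not subtract the mean, so it needs one fewer reduction per call.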

Getting Started with HRM

The research team has made their implementation available:

```bash
git clone https://github.com/sapientinc/HRM
cd HRM
pip install -r requirements.txt
python train.py --task sudoku --examples 1000
```

The codebase includes implementations for all three benchmark tasks (ARC-AGI, Sudoku-Extreme, Maze-Hard) and provides training scripts. Script names and flags may differ between versions of the repository, so check its README for the exact commands.

The Paradigm Shift

HRM challenges the prevailing wisdom that bigger is always better in AI. Instead, it demonstrates that:

- Architecture matters more than scale

- Brain-inspired design principles can be practically implemented

- Small, efficient models can outperform giants

- Reasoning can be learned directly, not just externalized

This work opens a new chapter in AI research, suggesting that the path to artificial general intelligence may lie not in scaling existing approaches, but in fundamentally rethinking how we build reasoning systems.

Challenges and Future Directions

While HRM represents a major breakthrough, several areas warrant further investigation:

- Scalability: How does performance scale with even larger, more complex problems?

- Generalization: Can HRM transfer reasoning skills across different domains?

- Integration: How might these principles combine with existing language model architectures?

- Understanding: What other algorithmic strategies might emerge from this framework?

Conclusion

The Hierarchical Reasoning Model represents more than just another incremental improvement in AI capabilities. It’s a proof of concept that different approaches to intelligence—inspired by biological systems and grounded in solid computational principles—can achieve remarkable results with modest resources.

As we stand at a potential inflection point in AI development, HRM offers a compelling alternative to the current paradigm of ever-larger language models trained on ever-more data. It suggests that the future of AI reasoning may be more elegant, efficient, and biologically inspired than we imagined.

The implications ripple far beyond academic research. From edge computing applications to more interpretable AI systems, from energy-efficient reasoning to new insights into biological intelligence, HRM opens doors to possibilities we’re only beginning to explore.

The age of hierarchical reasoning has begun. And it’s starting with just 27 million parameters and the wisdom of the human brain.

Paper: Hierarchical Reasoning Model (arXiv:2506.21734)

Code: github.com/sapientinc/HRM